Fitbit had a big product launch about three months after I started working there. It was the first smartwatch they made (the Ionic!) and all eyes in the company were on the data coming in during the first days out in the market. My team was on the hook for building the analytics tools that our stakeholders used to measure the success of the product launch and make quick decisions once it was out in the market. And with a small team of five analysts to support the entire product arm for the launch, it was a serious all-hands-on-deck situation.

The analytics team was heroic and scrappy, and the launch was a success. In the aftermath though, I could tell that we were not only exhausted, but that we hadn’t always made the best use of our time; a killer realization to have when your team is already so resource-constrained.

For starters, several of the pages we built for the launch had never been used or even looked at, at least according to the Tableau dashboard analytics. We had stakeholders from Product Managers responsible for individual hardware and software features all the way up to the CEO, and my team was extremely service-oriented, ready to say yes to whatever requests came in. But we often fell into the trap of giving our stakeholders what they said they wanted (often on a whim) [1] instead of what they actually needed.

Since then, I’ve found that the most successful internal data tools have been developed as though they were products themselves, keeping the users and their specific use cases always top of mind. Intentionally avoiding the overloaded term “data product” here, by “data tool” I mean anything built by the data team that non-data people at the company use; for example, a dashboard, an experimentation (A/B test) platform, a knowledge repository app to retrieve previous analysis results, or even a simple wiki that links out to common dashboards and reports.

Here are some product development practices I’ve found have been extremely helpful when developing self-service data tools for stakeholders:

Put yourself in the shoes of your users. As the developer of a self-serve data tool, you won’t always be there when the stakeholder uses it. As the old adage says, if they can find a way to misuse it, they will. This is especially harrowing for data practitioners, as misinterpreted data can cause more problems than if the data never existed in the first place. Predicting how data might be interpreted or where it can easily be misunderstood takes a lot of conscious thought up front.

Do user research. Never assume that you truly understand your stakeholders without talking to them. Conduct interviews with stakeholders ahead of time, and survey their data aptitude levels. Understand deeply what their data needs are and the “why” behind their requests, rather than taking requests at face value. Once the tool is implemented, watch them use what you’ve built and pay attention to which interactions are intuitive and where they hit sticking points.

Balance user requests with actual needs. There is a fine line between “user-centered design” and innovative “design thinking”, and the same goes for data tool development. Get user feedback early and often, but also learn how to read between the lines and deliver an experience they may not even have known they needed (or knew to ask for). As the data expert, take a step back and think about the real ROI of the features they are requesting before kicking off the work.

Create a Product Requirements Document. Early in the process, put together a written plan that outlines exactly what the stakeholders need and how you intend to build it, and then share it with your stakeholders for sign-off. This is extremely helpful for avoiding scope creep later in the process, and it makes sure the needs are well articulated before work really kicks into high gear.

Make a roadmap. How can you tell if you are hitting milestone deadlines or letting them slip? Checking in every week with an artifact like a roadmap helps make sure progress is on track, and is a great communication tool to set the right expectations for timelines. Project management tracking through agile or waterfall practices is also helpful for keeping the communication flowing.

Always be scaling. Why build a data tool for one stakeholder when more people can use the same platform? It isn’t always a good idea to try to solve too many problems at once, but building bespoke, “artisanal” tools just isn’t scalable. It always helps to ask yourself who else may benefit from the platform you are creating, and to address those users and use cases one way or another in your scope and roadmap.

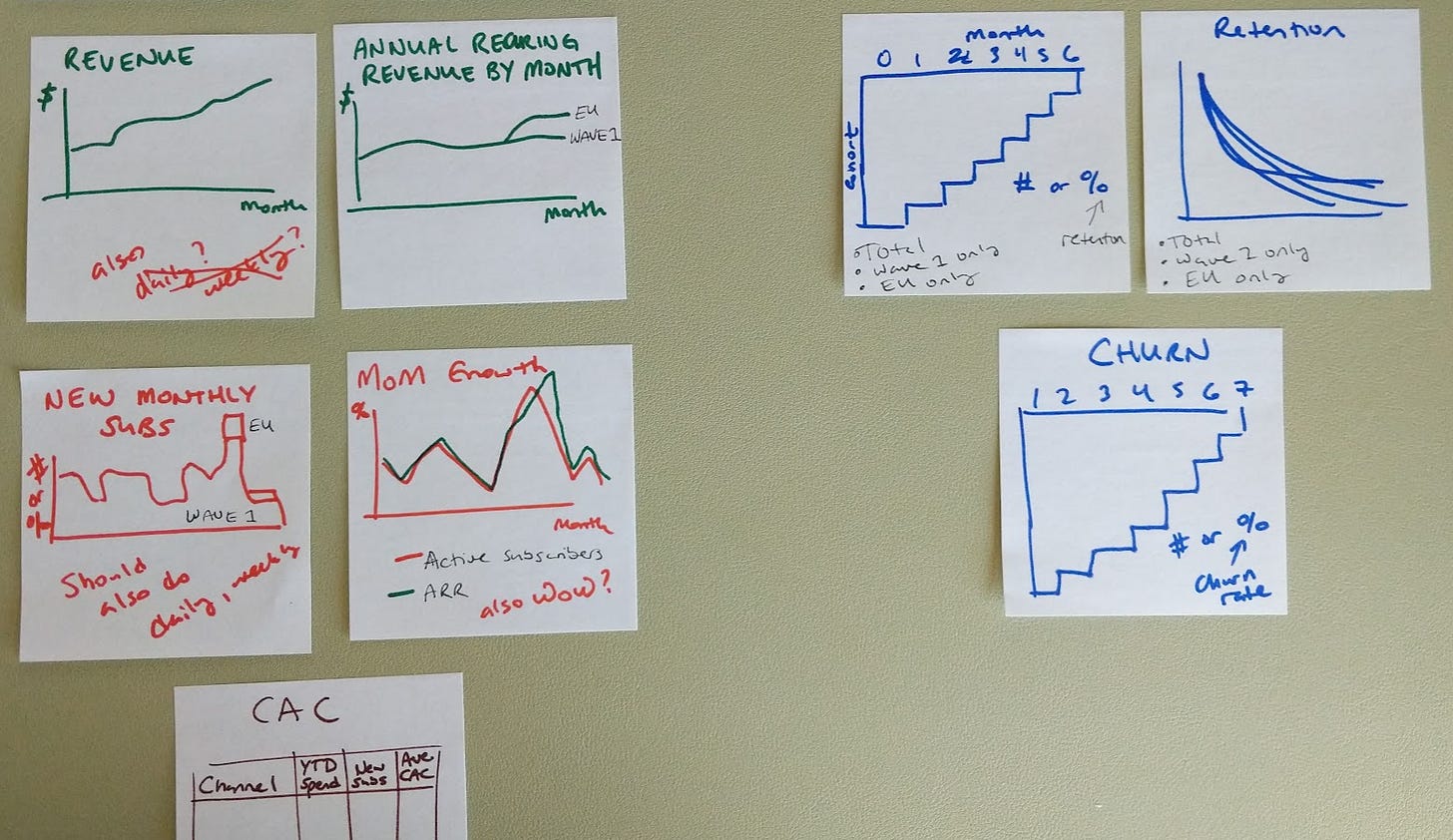

Sketch out the design. A little UX design ahead of time goes a long way to communicate what you intend to build for your stakeholders, especially when it comes to mock data visualizations. Data practitioners often want to “build as they go”, but the original ask can get lost when compromising for ease of development. I’ve also found that data practitioners have a tendency to find the most complete and elegant solution, forgetting that not all stakeholders may understand how to read the data as easily as the data experts. A quick check with a little sketch of the intended tool usage or data visualization can help see if the design is as intuitive as you think.

Build an MVP. What is the simplest thing you can put in front of a stakeholder? If you focus on building out the perfect tool before showing it to anyone, months can go by and the tool may not be so perfect any more. Be conscious of what the core of the data tool is meant to do, and do only that for the first pass, leaving all the edge cases out. Sometimes this merely means linking different data sources together with a simple front-end to demonstrate how it works end-to-end. If an MVP takes more than a few concentrated days to put together, then it isn’t an MVP. Challenge yourself or your team to a hackathon-style week and see what comes out.

Create a marketing plan. If no one knows that the tool exists, they won’t use it. Will you send an email with a link, or do a live demo? Will you show them the features of the tool all at once to get them started, or show them ongoing “pro tips” over the course of a few days or weeks? How will they find out about new features when they are added?

Beta test with a few friendly stakeholders who can learn the tool early and evangelize it. Not only does it help create a quick feedback loop back into development, but they will also become advocates for the tool among their peers and help with the acquisition and onboarding of more internal users. Always be scaling!

Have KPIs to assess success. Is just opening up the data tool enough, or are there certain user interactions with it that you want to track? It also may be worth doing more user interviews or surveys to assess sentiment and understand whether or not they find your tool useful. Ultimately, analytics tools are meant to help the business make decisions. A great question to ask your analytics tool users is “Were you easily able to answer the question you came with?”
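To make this concrete, here is a minimal sketch of how usage KPIs could be computed from a view log. Everything in it is hypothetical: the event format, the page names, and the `usage_kpis` helper are my own illustration, not an export format from Tableau or any other tool. It assumes you can get one row per page view, plus a yes/no answer to the question above.

```python
from collections import defaultdict

# Hypothetical usage log: (user, page, answered_question).
# In practice this might come from your BI tool's usage export plus a short survey.
events = [
    ("ana", "sales_overview", True),
    ("ana", "sales_overview", True),
    ("ben", "sales_overview", False),
    ("cy", "returns_detail", True),
]

# Every page that was built, including ones nobody may have opened.
all_pages = ["sales_overview", "returns_detail", "inventory_aging"]


def usage_kpis(events, all_pages):
    """Return views, unique users, and answer rate for each page."""
    users = defaultdict(set)
    views = defaultdict(int)
    answered = defaultdict(int)
    for user, page, got_answer in events:
        users[page].add(user)
        views[page] += 1
        answered[page] += int(got_answer)

    report = {}
    for page in all_pages:
        n = views[page]
        report[page] = {
            "views": n,
            "unique_users": len(users[page]),
            # No views means there is no answer rate to report.
            "answer_rate": answered[page] / n if n else None,
        }
    return report


report = usage_kpis(events, all_pages)

# Pages that were built but never opened are candidates for retirement.
unused_pages = [page for page, kpis in report.items() if kpis["views"] == 0]
```

Passing in the full list of pages, rather than only the pages that appear in the log, is what surfaces the ones that were never looked at.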

Plan for Customer Support. No one likes a broken tool, and once that trust in reliability is gone, stakeholders will stop wanting to use it. Creating a strategy for handling maintenance issues, such as ETL outages, and deciding who will be responsible for fixes and feature updates will ensure the long-term health and usage of the tool. Set up an internal messaging channel or email list for questions, and have tutorials, links, FAQs etc. located in a central, easily discoverable place.
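One small piece of that maintenance strategy could be an automated freshness check that flags stale data before a stakeholder notices. The sketch below is only an illustration under assumptions: the table names, the 26-hour threshold, and the `stale_tables` helper are hypothetical, and it assumes you can look up the last successful load time for each table.

```python
from datetime import datetime, timedelta


def stale_tables(last_loaded, now, max_age):
    """Return the names of tables whose latest load is older than max_age, sorted."""
    return sorted(
        name for name, loaded_at in last_loaded.items() if now - loaded_at > max_age
    )


# Hypothetical last-successful-load timestamps, e.g. read from an ETL metadata table.
last_loaded = {
    "daily_sales": datetime(2024, 1, 10, 6, 0),
    "device_syncs": datetime(2024, 1, 8, 6, 0),
}

# Fixed "now" so the example is reproducible; use datetime.now() in practice.
now = datetime(2024, 1, 10, 9, 0)

# Assumed threshold: a daily job plus a couple of hours of slack.
stale = stale_tables(last_loaded, now, timedelta(hours=26))
```

Whatever this returns could be posted to the same internal messaging channel you set up for questions, so users hear about an outage from you first.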

If these tips sound like a lot of work, they don’t have to be. At the very least, these Product Management techniques are good to keep in the back of your mind, and any of them can scale up or down with the scope of the data tool you are building. For example, a Product Requirements Document could be several pages long if it’s for a huge, months-long project (like building out an experimentation platform), or can be stripped down to a one-pager for a simple report that you want to try to get right on the first try. Either way, the goal is to communicate with stakeholders, set the right expectations, and build something that ultimately helps them leverage data for decisions. Stay tuned for future posts where I’ll share some templates that can make these practices quick and easy!

Opinions expressed here are my own and do not express the views or opinions of my past employers.

[1] A real conversation I had:

PM: “Can you get this data for me into a dashboard?”

Me, knowing this would be a non-straightforward, manual task: “Ok! What will you use it for?”

PM: “Oh, nothing in particular, I was just curious!”

Me: [sigh.]
